In January this year, the Chinese startup DeepSeek released its generative AI model R1 and shook up the AI ecosystem, sending shares of NVIDIA, the leading AI computing company, plummeting. Broadcom, Oracle, and TSMC, all beneficiaries of the AI boom, could not escape the sell-off either. How did DeepSeek R1 call into question the market competitiveness of existing AI leaders? We compare it with ChatGPT, which opened the door to the generative AI era, and look at DeepSeek R1's differences, strengths, and influence.

Is it "innovation" born of scarcity?

Surprisingly, DeepSeek was founded less than two years ago. It was established in May 2023 by High-Flyer, a quantitative investment1) firm, as a subsidiary dedicated to AI research. Despite its short history and a staff of fewer than 200, this small company has surprised the world by launching a model that performs almost on par with OpenAI's latest model, OpenAI-o1-1217.

It was widely believed that generative AI could be built only by training on high-end GPUs, with a large workforce and enormous cost. According to Stanford's 2024 AI Index Report, training Google's Gemini Ultra cost about ₩270 billion (about $191 million) and OpenAI's GPT-4 about ₩110 billion (about $78 million). DeepSeek, however, claims to have spent only about ₩8 billion. (If true, that is roughly one-fourteenth of GPT-4's cost.) Part of the reason for the low cost is that DeepSeek relied mainly on lower-spec GPUs. Because U.S. export restrictions kept it from freely using NVIDIA's high-performance H100 and H200 GPUs, DeepSeek had to make do with the H800, a cut-down version. Major U.S. AI models are reportedly trained on around 16,000 high-end GPUs, whereas DeepSeek is said to have trained R1 on a few thousand lower-spec GPUs.

Experts believe the Mixture-of-Experts (MoE) architecture may have played an important role in R1's high performance on lower-spec GPUs. MoE divides a model into many smaller "expert" sub-networks, each of which tends to specialize, and uses a router to activate only the few experts needed for each input token. DeepSeek R1 has 671 billion parameters in total, but only about 37 billion are activated for each token. Because not all parameters are used at once, the computation per token is far lower and processing is faster, although the full set of weights still has to be stored in memory. In short, by activating only a small set of specialized experts for each input, MoE reduces the cost of training and running the model!
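In an MoE model, the "experts" are sub-networks inside a single model, and a router picks a few of them for each input token. The toy top-k MoE layer below is a minimal sketch of that routing idea in plain Python, not DeepSeek's implementation. Assumptions: the experts are hypothetical scaling functions standing in for feed-forward sub-networks, the router is one linear scoring step followed by softmax, k=2 is arbitrary, and `make_expert` and `moe_layer` are illustrative names.

```python
import math


def softmax(xs):
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    # Hypothetical toy expert: a real MoE expert is a feed-forward sub-network;
    # here each expert simply scales its input vector.
    return lambda x: [scale * v for v in x]


def moe_layer(x, router_weights, experts, k=2):
    # Router: one score per expert, the dot product of the input with that expert's router vector.
    logits = [sum(w * v for w, v in zip(w_vec, x)) for w_vec in router_weights]
    probs = softmax(logits)
    # Keep only the k highest-scoring experts; the others are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        gate = probs[i] / norm  # renormalized weight for this expert
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top


# Four toy experts; expert i multiplies its input by i.
experts = [make_expert(float(i)) for i in range(4)]
# Hypothetical router weights chosen so that expert i scores i for this input.
router_weights = [[float(i), 0.0, 0.0, 0.0] for i in range(4)]
x = [1.0, 0.0, 0.0, 0.0]

out, top = moe_layer(x, router_weights, experts, k=2)
print(top)  # [3, 2]: only two of the four experts run
print(out)
```

Only the selected experts are ever called, which is where the compute savings come from; the unselected experts' weights still exist, which is why MoE saves computation rather than storage.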

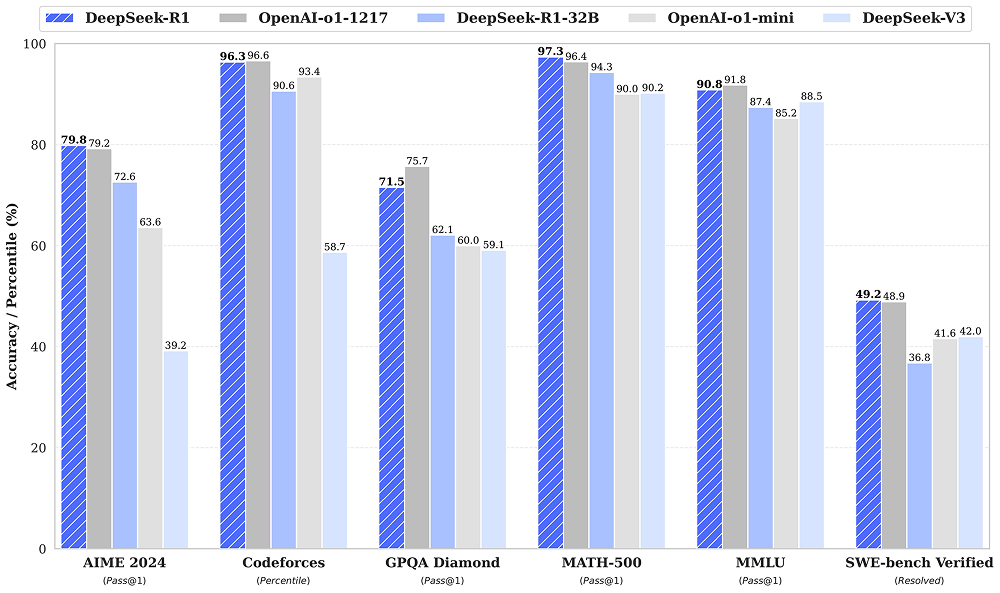

R1 performs on par with OpenAI's o1 model across multiple benchmarks (Source: DeepSeek GitHub)

Here, we briefly look at how DeepSeek-R1-Zero, unveiled alongside R1, was developed. Generative AI models usually go through supervised fine-tuning (SFT) so that they can give users answers of the desired quality. SFT trains the model on a relatively small dataset of questions and answers written by people, and producing such high-quality data takes a lot of manpower and money. R1-Zero, by contrast, skipped the SFT stage and improved its own performance through reinforcement learning using GRPO2). It was trained with pure reinforcement learning (RL)3), without relying on human-written example data, and its performance reportedly improved by applying "accuracy rewards," which give the model points when its reasoning arrives at the correct answer.

Because it relied on reinforcement learning instead of the costly SFT stage, however, DeepSeek-R1-Zero had a drawback: its answers were often hard for people to read. R1 builds on the R1-Zero approach but first trains on a small amount of high-quality data, so that it produces answers people can easily understand. Having built a low-cost, high-efficiency AI model in a short time, DeepSeek topped the U.S. free app download chart within a week of R1's release.
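The group-relative scoring at the heart of GRPO can be sketched in a few lines. This is a simplified illustration, assuming a rule-based accuracy reward of 1 for a correct final answer and 0 otherwise; `accuracy_reward`, `group_relative_advantages`, and the sample answers are hypothetical, and the policy update that would consume these advantages (with its clipping and KL-penalty terms) is omitted.

```python
import statistics


def accuracy_reward(answer, reference):
    # Hypothetical rule-based reward: 1.0 if the final answer matches the reference, else 0.0.
    return 1.0 if answer.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards, eps=1e-8):
    # GRPO compares each sampled answer with the others in its group instead of
    # using a separate value (critic) model: advantage = (r - mean) / std.
    # Population std is used here; implementations differ on this detail.
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Made-up group of four sampled answers to the question "What is 12 * 7?"
samples = ["84", "74", " 84 ", "96"]
rewards = [accuracy_reward(s, "84") for s in samples]
advantages = group_relative_advantages(rewards)
print(rewards)                              # [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in advantages])    # [1.0, -1.0, 1.0, -1.0]
```

Correct answers end up with a positive advantage and wrong ones with a negative advantage, so training pushes the model toward the reasoning that produced correct answers without any human-written reference solutions.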

DeepSeek R1: similar to ChatGPT, yet different

Common ground (both ChatGPT and DeepSeek R1)

⁕ An interactive AI platform that answers text-based questions!

⁕ Can be used in many ways, including content creation, question answering, analysis, and coding!

⁕ Targets users all over the world with multilingual support, including English!

| Difference | ChatGPT | DeepSeek R1 |
|---|---|---|
| Pricing | [Paid and free versions] Significant performance gap between the paid and free versions | [Free of charge] Free, yet performs similarly to ChatGPT's paid version |
| Reasoning | [No reasoning process shown] How the answer was derived is not visible | [Reasoning process visualized] Reveals the reasoning behind the answer, which makes it easy to refine the prompt |
| Extensibility | [Easy to connect and extend] ChatGPT plug-ins and API can be used | [Limited extensibility] Not as extensible as ChatGPT |
| Platform | [Web-friendly] Offers a mobile UI but is more web-oriented | [Mobile-friendly] Simpler, better-optimized mobile experience than ChatGPT |
| Security | [Investment in safeguards] Invests heavily in compliance, including security, and provides a variety of protections | [Security concerns] Concerns about possible information leakage for malicious purposes |

① Possibly the best free tool!

DeepSeek R1 is said to have more parameters than ChatGPT, allowing it to perform more complex and sophisticated tasks. Beyond general question answering, its strong performance has been confirmed in a variety of tasks such as creative writing, editing, and summarizing. In technical comparisons, R1 processes 150 tokens per second, 1.2 times faster than ChatGPT-4, and can retain up to 8,192 tokens of conversation history, so it can remember twice as much context as ChatGPT-4.
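A context limit like the 8,192 tokens cited above means older turns eventually fall out of what the model can "remember." Below is a minimal sketch of one common way a chat client keeps history within such a budget. `count_tokens` is a hypothetical stand-in (whitespace splitting, not a real tokenizer), and dropping the oldest messages first is an assumed policy, not a documented DeepSeek or OpenAI behavior.

```python
def count_tokens(text):
    # Assumption: whitespace splitting as a crude stand-in for a real tokenizer.
    return len(text.split())


def trim_history(messages, max_tokens=8192):
    # Walk backwards from the newest message, keeping messages until the token
    # budget would be exceeded; everything older is dropped.
    kept = []
    used = 0
    for msg in reversed(messages):
        n = count_tokens(msg)
        if used + n > max_tokens:
            break
        kept.append(msg)
        used += n
    return list(reversed(kept))  # restore chronological order


# Hypothetical conversation: three turns of 5,000, 3,000, and 2,000 "tokens".
history = ["a " * 5000, "b " * 3000, "c " * 2000]
trimmed = trim_history(history)
print([count_tokens(m) for m in trimmed])  # [3000, 2000]
```

The oldest 5,000-token turn no longer fits alongside the two newer ones, so it is dropped; a larger context window simply lets more of the conversation survive this cut.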

② Watch out for security vulnerabilities!

R1 is gaining popularity as a cost-effective AI, but concerns over its security vulnerabilities are growing. DeepSeek's privacy policy states that it collects user IDs, device names, IP addresses, and cookies and stores them on servers in China. European countries including Italy, France, the U.K., and Germany are examining how it collects and processes personal information, and Korea's Personal Information Protection Commission has also announced that it will look into the matter. (As of January 31, 2025)

③ An open model gives you a choice!

ChatGPT is a closed model whose source is not open to the outside world, while DeepSeek R1 is an open model. Core assets such as the R1 model weights have been released as open source, so anyone can download, modify, and improve them freely. Users can run DeepSeek's LLM for free and fine-tune it with additional training to get specialized performance. Because this is impossible with ChatGPT, R1 is likely to draw interest from many industries.

The Wave of Change Sparked by DeepSeek

📌 Big tech's quick change of course

Microsoft, Amazon Web Services (AWS), and Meta are reportedly using DeepSeek R1 to upgrade their services and develop models. They have moved aggressively to adopt R1 to reduce their dependence on OpenAI, the developer of ChatGPT. According to ZoomInfo, a corporate data provider, switching from OpenAI o1 to DeepSeek R1 could cut AI costs by two-thirds.

📌 Outlook: LLM performance converging at a high level

If companies can easily reach OpenAI-level model performance, market competition is likely to become saturated, and LLM performance is expected to converge upward. In that case, the differentiator among AI models is likely to shift to "optimizing the user experience." It will become important to offer customized services built on a company's own customer data or to design customer-friendly UIs. Companies can also strengthen their competitiveness by flexibly integrating and combining AI with existing business models. Even with the same AI performance, a service can offer a unique experience depending on the brand identity or sensibility it is given.

📌 From a competition of scale to one of creativity and optimization

DeepSeek has shown that world-class AI models can be developed with lower-spec GPUs, even without top-tier GPU resources. AI competition has now moved beyond a contest of scale. Besides securing unique, specialized data, we must focus on optimized algorithms that enable fast training with fewer resources. Creative thinking and innovative planning will also become important when incorporating AI into business. What matters is not securing a model, but deciding what value to create with it!

1) Quantitative investment: using math, statistics, and programming to develop asset management and investment strategies

2) GRPO: Group Relative Policy Optimization

3) Reinforcement learning: a method in which a model learns on its own through trial and error and adjusts its behavior according to rewards
